Discover the Potential of Your Process Data.

We help manufacturers in the process industry find new ways to enhance the efficiency and sustainability of their continuous and batch production.

Our solution

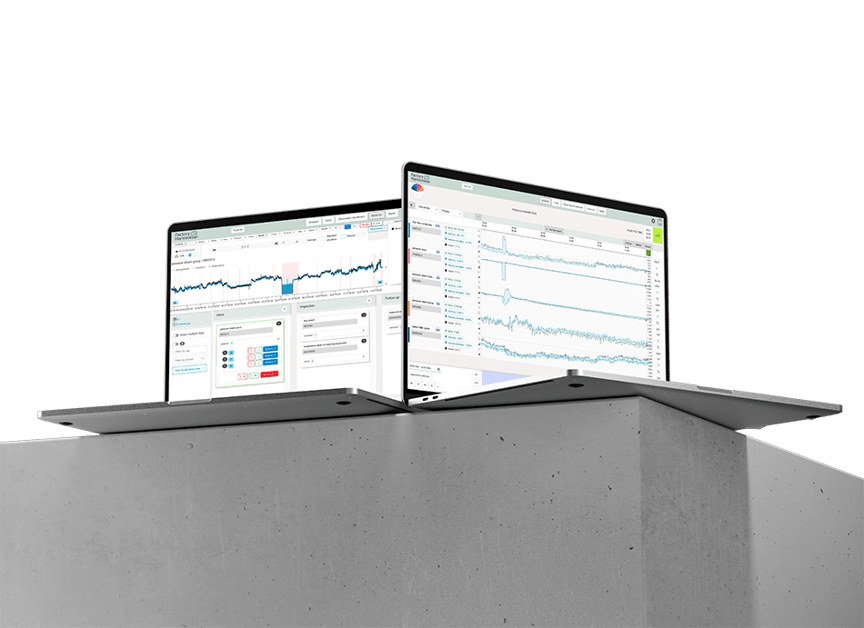

Factory Harmonizer is a process manufacturing analytics platform that provides actionable insights for your operators and engineers. What sets us apart is our approach: you leave the data engineering to us and focus on the improvements.

Achieve Transparency

Gain a clear view of every aspect of your production, allowing your operators and engineers to make quick, informed decisions.

Improve Yield

Identify opportunities to optimize processes and increase your output without compromising quality.

Boost Efficiency

Streamline your workflows with data-driven insights that enhance production efficiency.

Enhance Sustainability

Reduce waste, minimize emissions, and optimize resource utilization to ensure efficient and responsible production practices.

increased annual production profit

new annual energy savings for one production line

average payback time

“Factory Harmonizer supports the expertise of operators in decision-making when optimizing process performance.”

FH Harmony

Boost production stability and efficiency by monitoring and identifying process deviations that go beyond your regular check-ups. Made for complex processes.

FH Cycle

Streamline batch process control with real-time insights into the condition of your current and past batches. Enhance process efficiency and ensure optimal product quality.

FH Soft sensors

Get continuous values for variables that are challenging or costly to measure, such as lab measurements, to save time and resources and control your process proactively.

Enjoy Industry Insights

Transforming the mining and metals industry: The potential of machine learning and AI


Key Factors to Consider When Selecting an Analytics Provider for Your Production

Subscribe to our Insights

Fill in your email to receive insights from SimAnalytics about product updates, upcoming events, industry news, and other helpful content.